Techspiration

Norris Professor Kimberly Acquaviva, appointed by the UVA provost’s office to serve as a faculty AI guide, thinks of ChatGPT as “a great office mate” with often terrible ideas.

“It always has a lot to say,” added Acquaviva, “but sometimes it’s very, very wrong.”

With assistant professor Julie Roebuck (BSN ’98, MSN ’04, DNP ’20), Acquaviva leads monthly faculty get-togethers where colleagues gather over lunch, laptops open, to “play,” swap stories, and share generative AI lessons, fears, failures, and successes.

The gathering’s aim isn’t to compel faculty members to use AI but to “think about the pedagogical reasons why they might include or prohibit the use of AI,” explained Acquaviva, “and to teach people how to interrogate it, understand its power and limitations, and encourage them not to be scared or put off.”

“We are not pro- or anti-AI,” said Acquaviva. “We’re pro-thinking about AI.”

In the classes she teaches, Acquaviva encourages students to use generative AI tools like UVA’s Microsoft Copilot, and, like assistant professors Richard Ridge (PhD ’01) and Sharon Bragg (MSN ’12, DNP ’17), she requires students to disclose when and how they use those tools in completing their coursework.

Other faculty members, like Shelly Smith (BSN ’99, DNP ’12), require students to use AI but build in lessons and assignments that reveal its limitations.

Still others, like associate professor Ashley Hurst, who teaches ethics, expressly disallow AI “due to the ethical and legal issues surrounding the development of the LLMs (large language models, the systems that power generative AI tools),” such as “theft, copyright infringement, the promulgation of bias, and the detrimental impact AI data centers have on the environment.” In Hurst’s classes, AI instead serves as a springboard for discussions about equity and access.

“All of my classes focus on skill building related to research and writing,” explained Hurst. “Using AI LLMs short-circuits this skill-building development. It also exacerbates social inequities amongst our students. Students who did not receive skill-building opportunities in middle and high school research and writing are further disadvantaged when we skip over catching them up with their peers who were taught these important skills.”

Other faculty members use AI to buttress course and lesson planning, develop rubrics for grading student assignments, and brainstorm learning activities. Assistant professor Roebuck uses it to improve the look of her online presentations and course recordings. In her spring health policy course, Acquaviva creates weekly AI-generated podcasts with Google’s NotebookLM and offers students extra credit if they find and submit a list of errors (about 20 percent of each podcast, Acquaviva estimated, is not factual).

For some, AI remains an accelerant, but one used with caution.

“The AI product is the start,” said professor Kathryn Reid (BSN ’84, MSN ’88, CERTI-FNP ’96), “that helps me launch into completing the work. I don’t take it and run with it—I have to examine, criticize, and adjust so that the work is mine and tailored to my purpose. I see AI as helping us be more efficient but not usurping all of the work that we are expected to do.”

AI isn’t the only new tech being tried. Assistant professor Emily Evans (PhD ’14) uses tools like Peerceptiv and VoiceThread to enliven the visuals of course assignments and give students a way to collaborate on and assess one another’s work, while instructional designer William Canter teaches faculty how to use technology such as H5P, SpeedGrader, and Rubrics.

Canter, who meets one-on-one with faculty members to develop their teaching and technical skills, cautions them to remember that technology’s best used when there’s a compelling reason for it (a nod to goal 1, transforming educational offerings). “Don’t bring tech into the classroom unless you commit to doing it correctly and understand what its value-added is,” Canter advised, “because it all boils down to connecting as humans, showing our students our passion and vulnerability.

“Passion is contagious,” he added. “When students hear it, and it’s authentic, and they enjoy the material being presented, they’re inspired.”

Foundational Lessons

When students ask for help, how do we respond?

As the School bends and broadens its approach to nursing education, it upholds its core, those traits that have made it so strong for so long: how it centers and supports students.


New Tactics

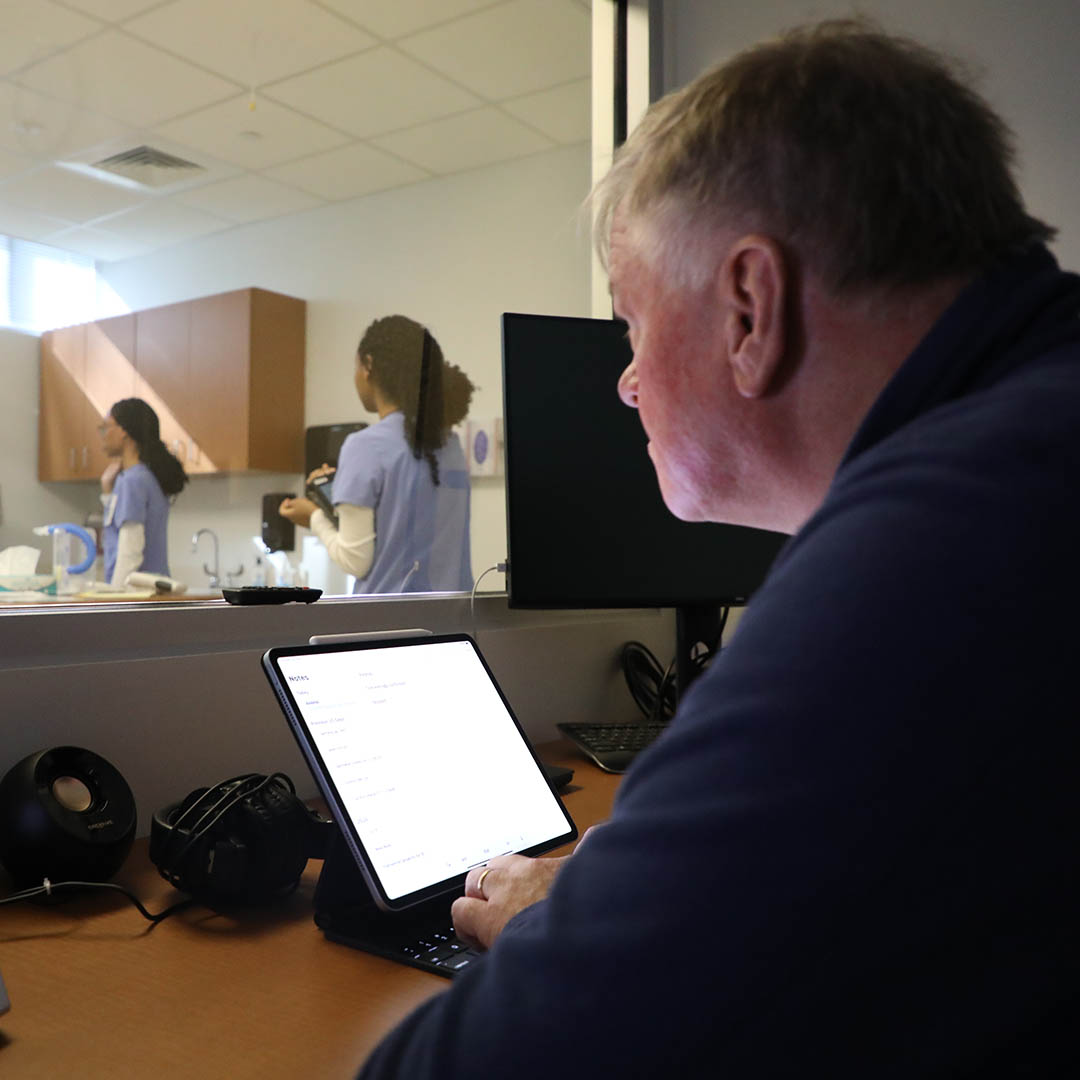

Becoming AI Curious

How clinical simulation educator James Nisley learned to lean on ChatGPT to flesh out practice scenarios that sing. He’ll never go back.


Powered by Orange and Blue

Alumni raise their hands to serve

How the School is turning to alumni, like advanced practice nurse Hania Aloul (MSN ’13), who are willing to “pay it forward” by nurturing the next generation of “curious, energetic, sharp-witted” clinicians.
